Real-time Monocular Full-body Capture in World Space via Sequential Proxy-to-Motion Learning

Yuxiang Zhang¹, Hongwen Zhang¹*, Liangxiao Hu², Hongwei Yi³, Shengping Zhang², Yebin Liu¹* (* corresponding authors)

¹Tsinghua University

²Harbin Institute of Technology

³Max Planck Institute for Intelligent Systems

Fig 1. We demonstrate that our real-time monocular full-body capture system can produce accurate human motions with plausible foot-ground contact in world space.

Abstract

Learning-based approaches to monocular motion capture have recently shown promising results by learning to regress in a data-driven manner. However, owing to difficulties in data collection and network design, existing solutions still struggle to achieve real-time full-body capture that is also accurate in world space. In this work, we contribute a sequential proxy-to-motion learning scheme together with a proxy dataset of 2D skeleton sequences and 3D rotational motions in world space. Such proxy data enables us to build a learning-based network with accurate full-body supervision while also mitigating generalization issues. For more accurate and physically plausible predictions, we propose a contact-aware neural motion descent module that makes the network aware of foot-ground contact and of motion misalignment with the proxy observations. Additionally, we share body-hand context information in our network so that the recovered wrist poses are more compatible with the full-body model. With the proposed learning-based solution, we demonstrate the first real-time monocular full-body capture system with plausible foot-ground contact in world space.

Overview

Fig 2. Illustration of the proposed method. Our method takes the estimated 2D skeleton poses of the body and hand as inputs and recovers the 3D motions with plausible foot-ground contact in world space.
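The two signals that the method description relies on, misalignment with the observed 2D skeleton and foot-ground contact, can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes a plain pinhole camera, a flat ground plane at height zero with the y axis pointing up, and hand-picked thresholds. The names `project`, `misalignment`, and `foot_contact` are hypothetical and exist only for this illustration.

```python
import math

def project(joint, focal=1000.0, cx=0.0, cy=0.0):
    """Pinhole projection of a camera-space 3D point (x, y, z) to pixel coordinates."""
    x, y, z = joint
    return (focal * x / z + cx, focal * y / z + cy)

def misalignment(joints_3d, keypoints_2d, focal=1000.0):
    """Per-joint 2D residual between projected 3D joints and observed 2D keypoints."""
    residuals = []
    for joint, (u_obs, v_obs) in zip(joints_3d, keypoints_2d):
        u, v = project(joint, focal)
        residuals.append((u - u_obs, v - v_obs))
    return residuals

def foot_contact(foot_positions, dt, height_thresh=0.05, speed_thresh=0.2):
    """Heuristic contact flags from world-space foot positions (metres, y up).

    A frame counts as "in contact" when the foot is close to the ground
    and nearly stationary. Both thresholds are illustrative assumptions.
    """
    flags = [False]  # no velocity is available for the first frame
    for prev, cur in zip(foot_positions, foot_positions[1:]):
        speed = math.dist(prev, cur) / dt
        flags.append(cur[1] < height_thresh and speed < speed_thresh)
    return flags
```

A learned module could consume residuals and contact flags like these as inputs when refining a motion estimate; how the actual network encodes them is not specified on this page.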

Fig 3. Illustration of the proposed cross-attention for neural motion descent.
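For readers unfamiliar with cross-attention, the following is a generic scaled dot-product sketch in plain Python. It is an assumption-laden illustration rather than the module shown in the figure: it omits learned projection matrices and multiple heads, and which tokens act as queries versus keys and values in the paper is not stated on this page. The function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention without learned projections.

    queries: list of d-dimensional vectors (for example, motion-state tokens)
    keys, values: equally long lists of vectors (for example, observation tokens)
    Returns one attended vector per query.
    """
    d = len(queries[0])
    dim_v = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)])
    return outputs
```

The design point this illustrates is that each query draws information from a second token set, weighted by similarity, rather than attending only within its own sequence.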

Results

Fig 4. Visualization of reconstruction results across different cases in the (a) 3DPW, (b) EHF, and (c) Human3.6M datasets and (d, e, f) our captured sequences.

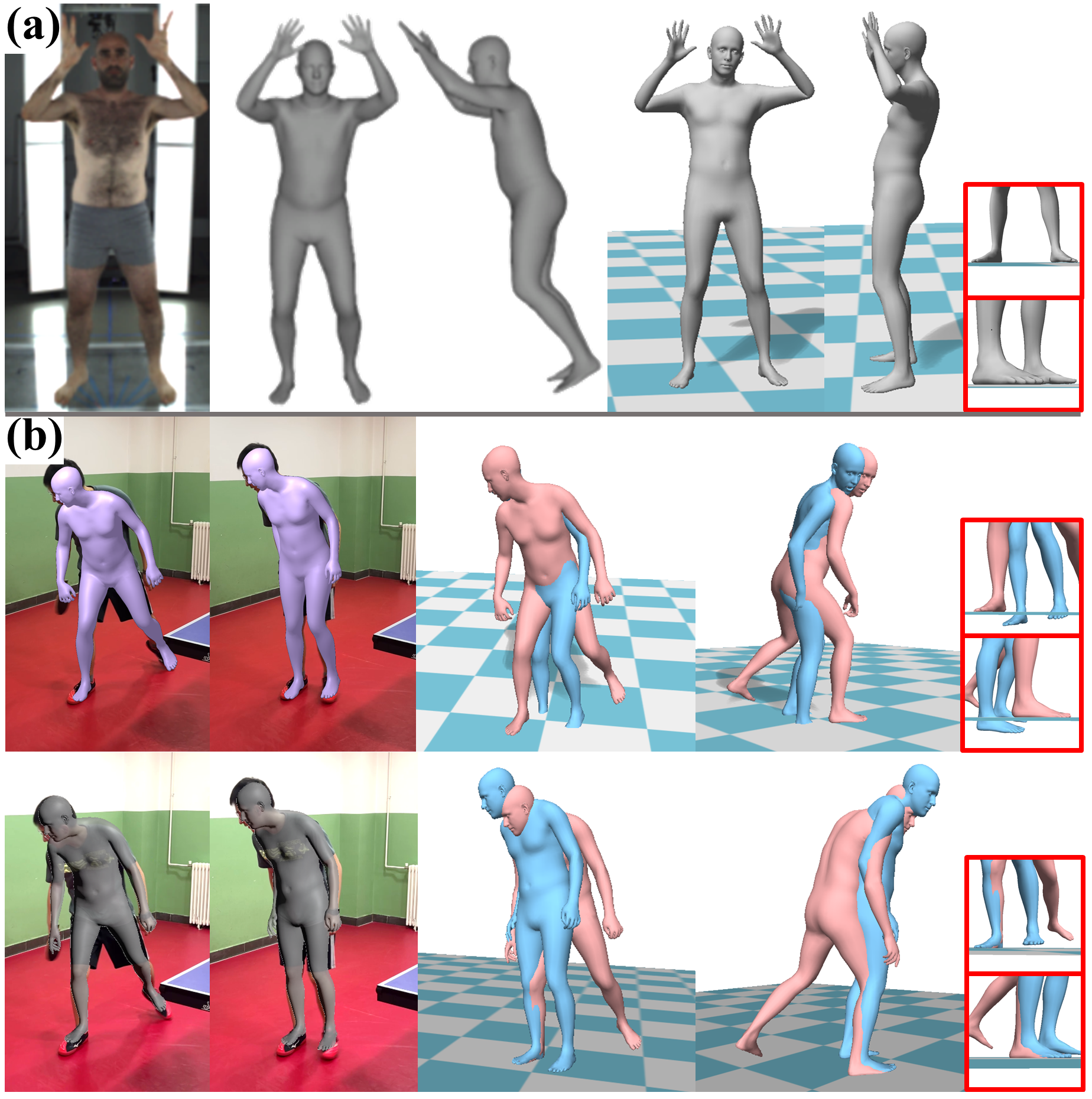

Fig 5. Qualitative comparison. (a) Left: LearnedGD, right: Ours. (b) Top: PyMAF-X, bottom: Ours.

Technical Paper

Demo Video

Related Work

Citation

@article{zhang2023realtime,
  title={Real-time Monocular Full-body Capture in World Space via Sequential Proxy-to-Motion Learning},
  author={Zhang, Yuxiang and Zhang, Hongwen and Hu, Liangxiao and Yi, Hongwei and Zhang, Shengping and Liu, Yebin},
  journal={arXiv preprint arXiv:2307.01200},
  year={2023}
}