CVPR 2012

A Data-driven Approach for Facial Expression Synthesis in Video

Kai Li1,2, Feng Xu1, Jue Wang3, Qionghai Dai1, Yebin Liu1

1 Department of Automation, Tsinghua University; 2 Graduate School at Shenzhen, Tsinghua University; 3 Adobe Systems

Abstract

This paper presents a method for synthesizing a realistic facial animation of a target person, driven by a facial performance video of another person. Unlike traditional facial animation approaches, our system takes advantage of an existing facial performance database of the target person and generates the final video by retrieving database frames whose expressions are similar to those in the input. To achieve this, we develop an expression similarity metric that accurately measures the expression difference between two video frames. To enforce temporal coherence, our system employs a shortest-path algorithm to choose the optimal image for each frame from a set of candidate frames determined by the similarity metric. Finally, our system adopts an expression mapping method to further reduce the expression difference between the input and retrieved frames. Experimental results show that our system can generate high-quality facial animation using the proposed data-driven approach.
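The temporal-coherence step can be illustrated with a small dynamic-programming sketch. This is not the authors' implementation: the function name `select_frames`, the `unary` costs (standing in for the expression similarity metric), and the `transition` cost (standing in for whatever smoothness term the paper uses) are all assumptions made for illustration. It only shows how a shortest path through per-frame candidate sets can differ from picking the best candidate for each frame independently.

```python
from typing import Callable, List, Sequence


def select_frames(
    candidates: Sequence[Sequence[int]],
    unary: Sequence[Sequence[float]],
    transition: Callable[[int, int], float],
) -> List[int]:
    """Choose one database frame per query frame by a shortest-path search.

    candidates[t][i] is the database index of the i-th candidate for query
    frame t, unary[t][i] is its (hypothetical) expression distance to the
    query frame, and transition(a, b) is a (hypothetical) cost for showing
    database frame b right after database frame a.
    """
    num_frames = len(candidates)
    cost = [list(unary[0])]  # cost[t][i]: cheapest path ending at candidate i
    back = []                # back[t-1][i]: best predecessor of candidate i at t

    for t in range(1, num_frames):
        row_cost, row_back = [], []
        for j, cand in enumerate(candidates[t]):
            # Cheapest way to reach this candidate from any previous candidate.
            best_i = min(
                range(len(candidates[t - 1])),
                key=lambda i: cost[t - 1][i] + transition(candidates[t - 1][i], cand),
            )
            step = transition(candidates[t - 1][best_i], cand)
            row_cost.append(cost[t - 1][best_i] + step + unary[t][j])
            row_back.append(best_i)
        cost.append(row_cost)
        back.append(row_back)

    # Trace the cheapest path backwards from the last query frame.
    j = min(range(len(cost[-1])), key=cost[-1].__getitem__)
    path = [j]
    for t in range(num_frames - 1, 0, -1):
        j = back[t - 1][j]
        path.append(j)
    path.reverse()
    return [candidates[t][i] for t, i in enumerate(path)]


# Toy example: consecutive database frames are free to chain, jumps cost 1.
toy_candidates = [[10, 50], [11, 51], [12, 90]]
toy_unary = [[0.2, 0.1], [0.2, 0.1], [0.1, 0.3]]
toy_transition = lambda a, b: 0.0 if b == a + 1 else 1.0
toy_result = select_frames(toy_candidates, toy_unary, toy_transition)
```

In the toy example, choosing the lowest unary cost per frame would give frames 50, 51, 12, which includes a jump between unrelated database frames; the path search instead returns the coherent run 10, 11, 12.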

[Paper] [Code] [IEEE Trans. MM]

Overview

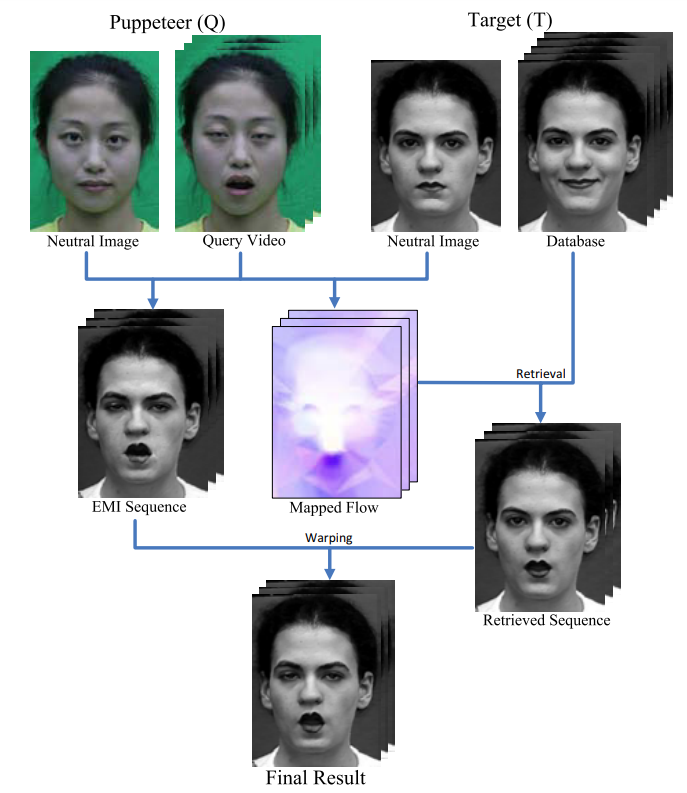

Fig. 1. System overview. The optical flow between each query frame and its neutral face is first mapped to the target person and then used to retrieve frames from the database. Meanwhile, the neutral image of the target person is warped to generate an EMI sequence with the query performance. Finally, the retrieved sequence is refined by the EMI to synthesize the final result.
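The retrieval stage in the figure can be pictured as a nearest-neighbour lookup over flow fields. The sketch below is an assumption-laden illustration rather than the method from the paper: it treats each flow field as a flattened list of numbers and uses plain Euclidean distance as a stand-in for the paper's expression similarity metric, which is not specified on this page. The name `retrieve_candidates` and the parameter `k` are hypothetical.

```python
import heapq
import math
from typing import List, Sequence


def retrieve_candidates(
    query_flow: Sequence[float],
    db_flows: Sequence[Sequence[float]],
    k: int = 5,
) -> List[int]:
    """Return indices of the k database frames closest to the query.

    query_flow is the (already mapped) flow of one query frame, flattened
    into a vector; db_flows holds one flattened flow per database frame.
    Euclidean distance is a placeholder for the real similarity metric.
    """
    scored = ((math.dist(query_flow, flow), idx) for idx, flow in enumerate(db_flows))
    return [idx for _, idx in heapq.nsmallest(k, scored)]


# Toy example with two-component "flows".
toy_db = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
toy_nearest = retrieve_candidates([0.9, 0.9], toy_db, k=2)
```

The candidate list returned for each query frame is what a later temporal-coherence step would choose from.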

Video

Citation

Li, Kai, et al. "A data-driven approach for facial expression synthesis in video." 2012 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2012.

@inproceedings{li2012data,
  title={A data-driven approach for facial expression synthesis in video},
  author={Li, Kai and Xu, Feng and Wang, Jue and Dai, Qionghai and Liu, Yebin},
  booktitle={2012 IEEE Conference on Computer Vision and Pattern Recognition},
  pages={57--64},
  year={2012},
  organization={IEEE}
}