SIGGRAPH 2023 (Journal Track)

AvatarReX: Real-time Expressive Full-body Avatars

Zerong Zheng, Xiaochen Zhao, Hongwen Zhang, Boning Liu, Yebin Liu

Department of Automation, Tsinghua University

NNKosmos Technology

Abstract

We present AvatarReX, a new method for learning NeRF-based full-body avatars from video data. The learnt avatar not only provides expressive control of the body, hands and face together, but also supports real-time animation and rendering. To this end, we propose a compositional avatar representation in which the body, hands and face are modeled separately, so that the structural prior from parametric mesh templates is properly utilized without compromising representation flexibility. Furthermore, we disentangle the geometry and appearance of each part. With these technical designs, we propose a dedicated deferred rendering pipeline that runs at real-time frame rates and synthesizes high-quality free-view images. The disentanglement of geometry and appearance also allows us to design a two-pass training strategy that combines volume rendering and surface rendering for network training. In this way, patch-level supervision can be applied to force the network to learn sharp appearance details on the basis of geometry estimation. Overall, our method enables automatic construction of expressive full-body avatars with real-time rendering capability, and can generate photo-realistic images with dynamic details for novel body motions and facial expressions.
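As background for the volume rendering pass mentioned above, the following is a minimal sketch of the standard NeRF-style quadrature that composites density and color samples along a single ray. It is not the AvatarReX pipeline: the function name `composite_ray` and its arguments are hypothetical, and the sample values are made up purely for illustration.

```python
import math

def composite_ray(densities, colors, deltas):
    """Composite samples along one ray with the standard NeRF quadrature.

    densities: non-negative volume densities sigma_i, ordered near to far
    colors:    one (r, g, b) tuple per sample
    deltas:    length of the ray segment covered by each sample
    Returns (rgb, weights, opacity). All names are illustrative assumptions.
    """
    transmittance = 1.0          # fraction of light not yet absorbed
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        # Opacity contributed by this segment.
        alpha = 1.0 - math.exp(-sigma * delta)
        # Contribution weight: visible fraction times segment opacity.
        weight = transmittance * alpha
        weights.append(weight)
        for channel in range(3):
            rgb[channel] += weight * color[channel]
        # Light remaining for the samples behind this one.
        transmittance *= 1.0 - alpha
    return rgb, weights, 1.0 - transmittance

# Illustrative ray: empty space, then a nearly opaque surface, then a sample behind it.
rgb, weights, opacity = composite_ray(
    densities=[0.0, 1e9, 5.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
```

When the density concentrates at a surface, almost all of the weight falls on a single sample, which is one intuition for why a surface rendering pass can stand in for full volume rendering once geometry has been estimated.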

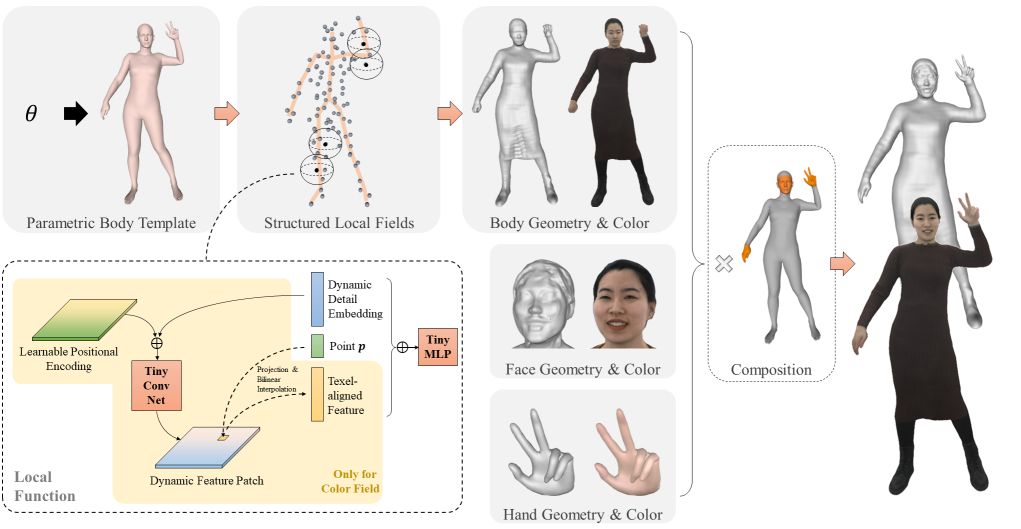

Fig 1. Illustration of our compositional avatar representation. Our expressive avatar is composed of three parts, namely the main body, the hands and the face. For clarity, we only illustrate the body representation here. The core of our body representation is a set of structured local implicit fields, and we enhance their detail representation power by introducing an explicit dynamic feature patch for each field.

Results

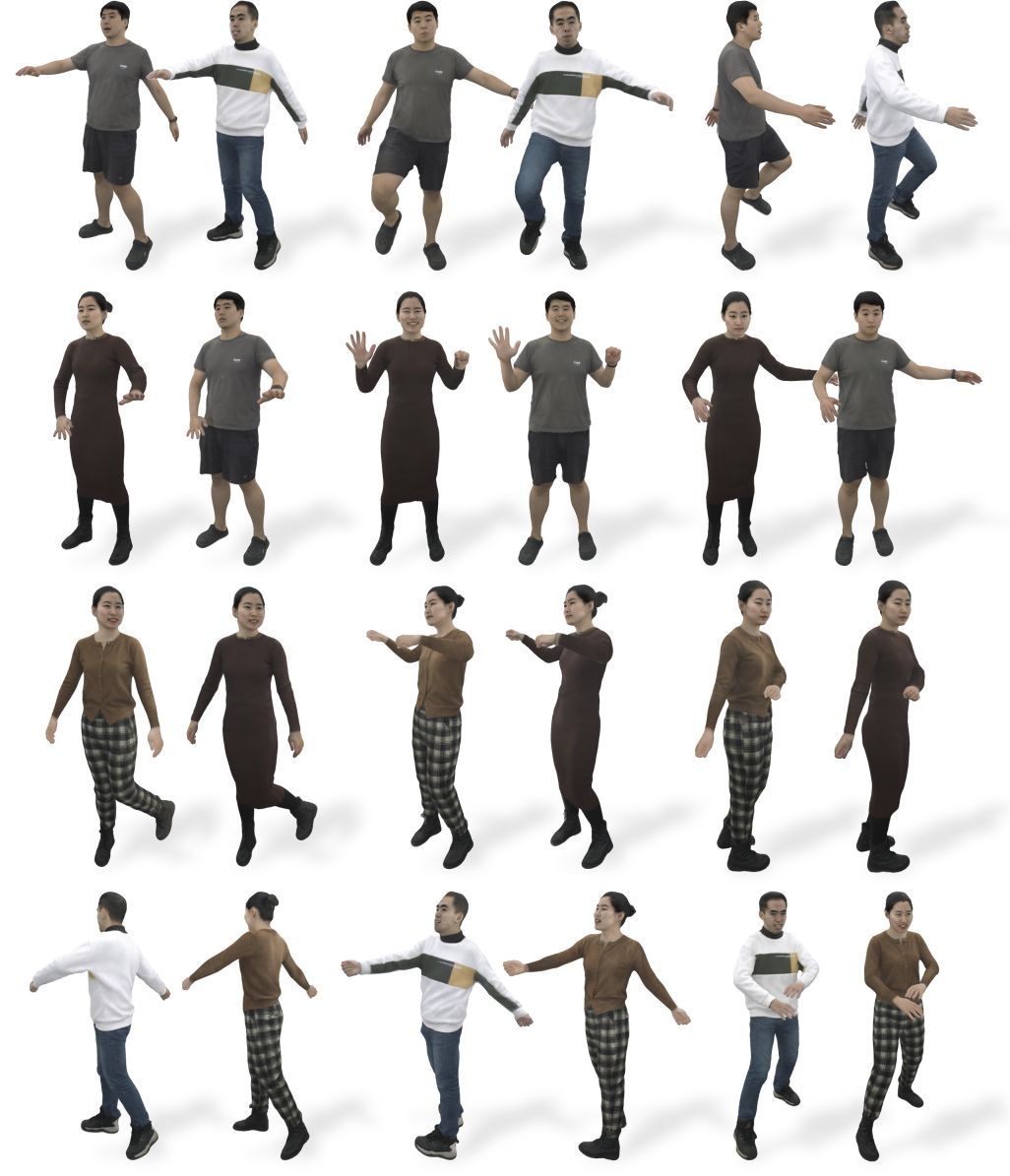

Fig 2. Example animation results produced by our method. Our method can learn photorealistic full-body avatars that provide full controllability over the body pose, hand gesture and facial expression all together. Moreover, the avatars can be rendered in real time without compromising image quality.

Fig 3. Qualitative results on novel pose synthesis. We train our network for four identities and show the novel pose synthesis results, where two different subjects perform the same motions and expressions.

Technical Paper

Demo Video

Citation

Zerong Zheng, Xiaochen Zhao, Hongwen Zhang, Boning Liu, Yebin Liu. "AvatarReX: Real-time Expressive Full-body Avatars". ACM Trans. Graph. (Proc. SIGGRAPH) 2023

@article{zheng2023avatarrex,

title={AvatarReX: Real-time Expressive Full-body Avatars},

author={Zheng, Zerong and Zhao, Xiaochen and Zhang, Hongwen and Liu, Boning and Liu, Yebin},

journal={ACM Transactions on Graphics (TOG)},

volume={42},

number={4},

articleno={},

year={2023},

publisher={ACM New York, NY, USA}

}