Real-time Sparse-view Multi-person Total Motion Capture

Yuxiang Zhang, Zeping Ren, Liang An, Hongwen Zhang, Tao Yu, Yebin Liu

Department of Automation and BNRist, Tsinghua University

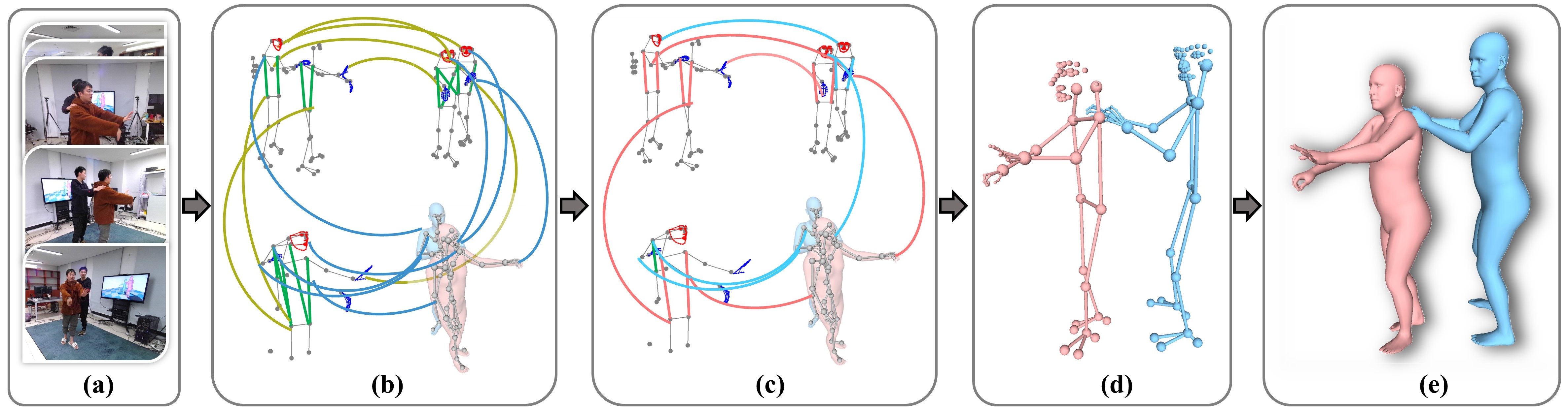

Fig 1. Our real-time multi-person total capture system with 5 RGB inputs.

Abstract

Real-time multi-person total motion capture is one of the most challenging tasks in human motion capture. A multi-view configuration reduces occlusions and depth ambiguity, yet it further complicates the problem by requiring cross-view association. In this paper, we contribute the first real-time multi-person total motion capture system under sparse views. To enable full-body cross-view association in real time, we propose a highly efficient association algorithm, named Clique Unfolding, by reformulating the widely used fast unfolding algorithm for community detection. Moreover, we propose an adaptive motion prior based on human motion prediction to improve SMPL-X fitting performance in the final step. Benefiting from the proposed association and fitting methods, our system achieves robust, efficient and accurate multi-person total motion capture. Experiments demonstrate the efficiency and effectiveness of the proposed method.

Demo Video

Overview

Fig 2. Method overview. (a) Input multi-view RGB streams with full-body pose estimations. (b) Build the 4D association graph. (c) Partition the association graph with our clique unfolding algorithm. (d) Reconstruct 3D skeletons. (e) Optimize the parametric SMPL-X model.

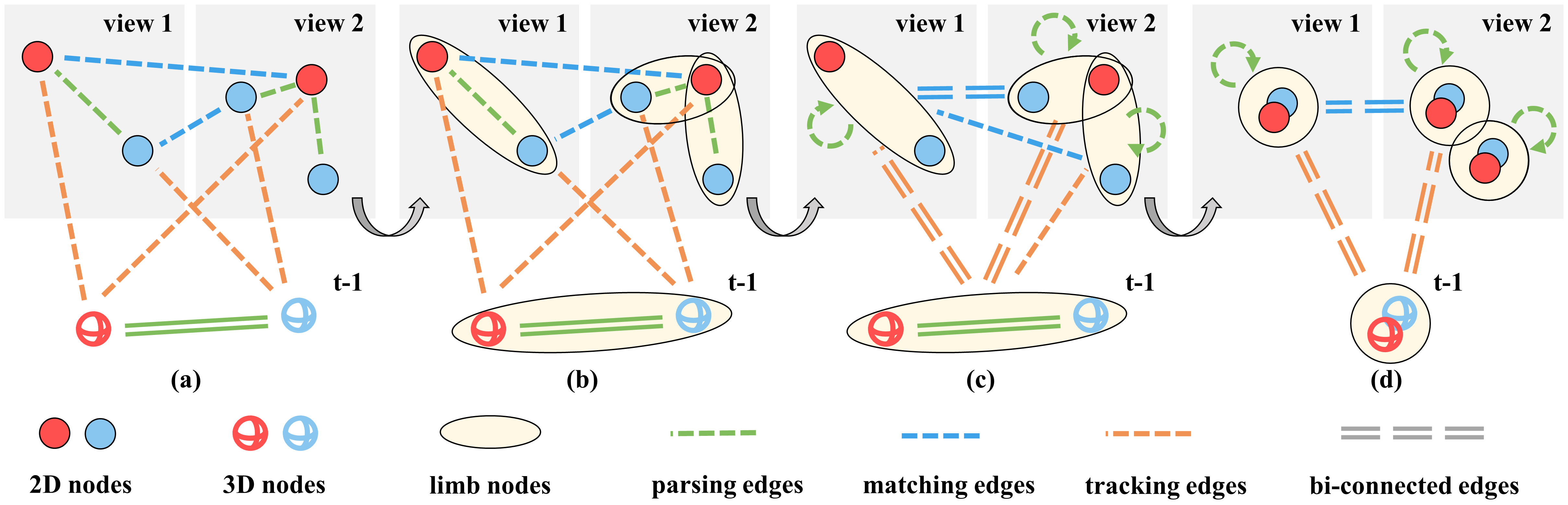

Fig 3. Illustration of limb subgraph reconstruction. (a) Input association graph. (b) Merge nodes along parsing edges (green dotted lines) to form limb nodes. (c) Establish edges between limb nodes by merging the edges of the previous graph. A double-dotted line means that both members of a limb node are connected to their counterparts in the other limb node. A single-dotted line means that only one member of the limb node is connected. (d) Discard the single-connected edges and average the bi-connected edges to build the final limb subgraph.
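The merge, discard and average steps described in this caption can be sketched in a few lines of Python. This is only an illustrative sketch, not the system's implementation: the function name `build_limb_subgraph`, the node tuples `(view, joint, index)`, the dictionary-based edge storage and the example weights are all assumptions made for the example. It also assumes every parsing edge lists its two joints in the same order (for example elbow first, wrist second).

```python
from itertools import combinations

def build_limb_subgraph(parsing_edges, assoc_edges):
    """Sketch of limb subgraph construction (hypothetical data layout).

    parsing_edges: list of (joint_a, joint_b) node pairs; each pair is merged
                   into one limb node. Joint order is assumed consistent.
    assoc_edges:   dict mapping frozenset({u, v}) -> weight for association
                   edges between joint nodes of the same type.
    Returns the limb nodes and a dict {(i, j): weight} of limb-level edges.
    """
    # Step (b): every parsing edge becomes a single limb node.
    limbs = [tuple(edge) for edge in parsing_edges]

    limb_edges = {}
    for (i, (a1, b1)), (j, (a2, b2)) in combinations(enumerate(limbs), 2):
        # Step (c): look up the edges between corresponding members.
        w_a = assoc_edges.get(frozenset((a1, a2)))
        w_b = assoc_edges.get(frozenset((b1, b2)))
        # Step (d): keep only bi-connected pairs and average their weights;
        # single-connected pairs are discarded.
        if w_a is not None and w_b is not None:
            limb_edges[(i, j)] = 0.5 * (w_a + w_b)
    return limbs, limb_edges

# Toy example: one forearm seen in view 0, two candidates in view 1.
e0, w0 = ("v0", "elbow", 0), ("v0", "wrist", 0)
e1, w1 = ("v1", "elbow", 0), ("v1", "wrist", 0)
e2, w2 = ("v1", "elbow", 1), ("v1", "wrist", 1)

parsing_edges = [(e0, w0), (e1, w1), (e2, w2)]
assoc_edges = {
    frozenset((e0, e1)): 0.75,  # elbow-elbow edge, limb 0 vs limb 1
    frozenset((w0, w1)): 0.5,   # wrist-wrist edge, limb 0 vs limb 1
    frozenset((e0, e2)): 0.25,  # only the elbows are linked for limb 0 vs limb 2
}

limbs, limb_edges = build_limb_subgraph(parsing_edges, assoc_edges)
print(limb_edges)
```

In this toy input, limbs 0 and 1 are bi-connected, so their edge survives with the averaged weight, while the pair of limbs 0 and 2 is single-connected and is dropped.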

Results

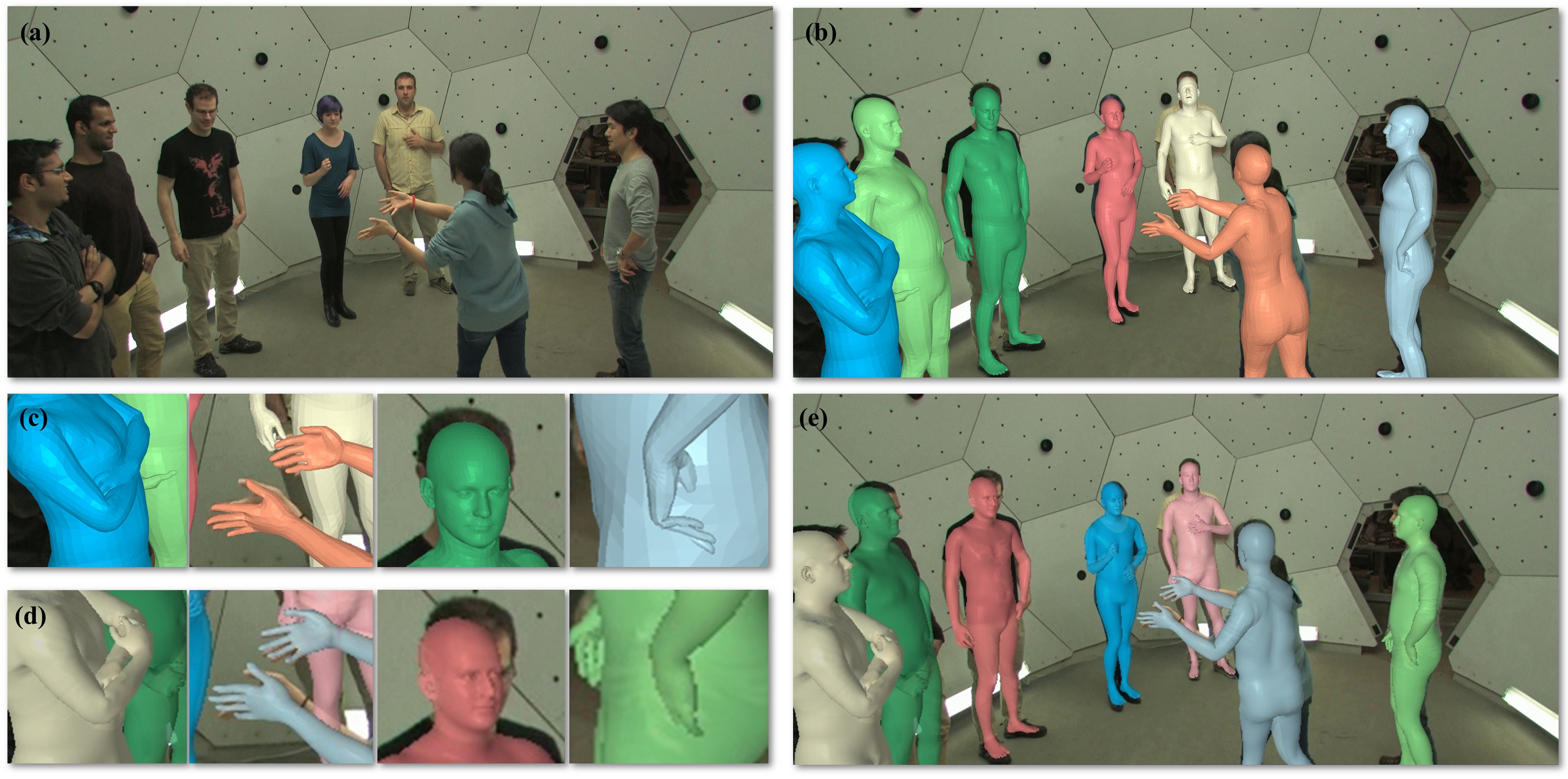

Fig 4. Fitting results of our system using 5 views. From left to right: input reference images, parametric model alignment, facial and hand alignment, and 3D visualization from a novel view. (a) Results on the Shelf dataset. (b)(c) Results on our real-time system. (d) Results on the CMU dataset.

Fig 5. Qualitative comparison against LightCap. (a) Input from the CMU dataset (8 views used). (b, c) Results of our method. (d, e) Results of LightCap, taken from their paper. Our real-time system produces results comparable to, or even better than, those of this offline method.